Optical Flow and Object Tracking for Robots

Skills Demonstrated

For the final project of our graduate-level computer vision course, Abbas Shikari and I developed MarbleFlow, an optical marble tracker that uses a mobile camera. A camera mounted on a robot arm tracked a ball's movement, and the arm followed the ball based on that camera input. We also implemented dense optical flow to estimate the camera's motion trajectory. The project implemented two key computer vision algorithms:

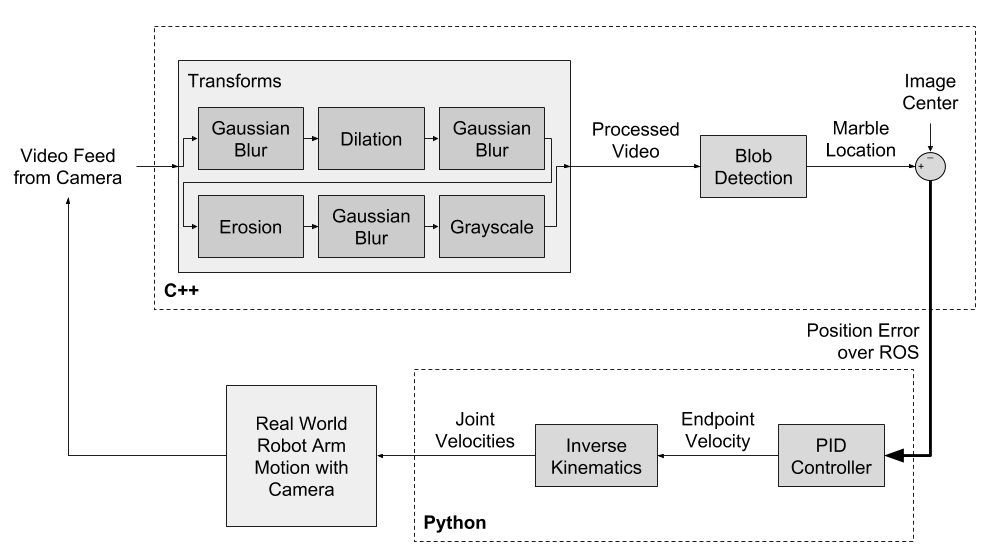

- Ball Tracking System: Using threshold-based object detection, we computed the 2D centroid of the ball in the video feed. Comparing this centroid with the image center gave an error signal along the x and y axes, which served as the input to a velocity controller on the Sawyer robot arm.

- Dense Optical Flow Algorithm: We computed dense optical flow over the full image and averaged it to estimate the camera's velocity. These velocity estimates let us reconstruct the camera's estimated trajectory.
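The ball-tracking step above can be sketched as follows. The project's own implementation was written in C++ with OpenCV; this is a dependency-free Python illustration, not that code. It makes two assumptions: the image is a single-channel array stored as nested lists, and the ball can be isolated by a hypothetical intensity band `[lo, hi]`. The centroid comes from the image moments m00, m10 and m01. The error is the centroid's offset from the image center, which is the signal a velocity controller would try to drive to zero. In OpenCV the same two steps map naturally onto `cv::inRange` and `cv::moments`.

```python
from typing import List, Optional, Tuple

# Hypothetical representation: a single-channel image as rows of 0-255 ints.
Image = List[List[int]]


def threshold_mask(img: Image, lo: int, hi: int) -> List[List[bool]]:
    """Mark pixels whose value falls inside [lo, hi] as 'ball' pixels."""
    return [[lo <= v <= hi for v in row] for row in img]


def centroid(mask: List[List[bool]]) -> Optional[Tuple[float, float]]:
    """Centroid (x, y) of the mask, computed from the moments m00, m10, m01."""
    m00 = m10 = m01 = 0
    for y, row in enumerate(mask):
        for x, is_ball in enumerate(row):
            if is_ball:
                m00 += 1  # pixel count
                m10 += x  # sum of x coordinates
                m01 += y  # sum of y coordinates
    if m00 == 0:
        return None  # no pixel passed the threshold: ball not visible
    return m10 / m00, m01 / m00


def tracking_error(img: Image, lo: int, hi: int) -> Optional[Tuple[float, float]]:
    """Offset of the ball centroid from the image center, in pixels.

    Positive x means the ball is right of center; positive y means it is
    below center, because image rows grow downward.
    """
    c = centroid(threshold_mask(img, lo, hi))
    if c is None:
        return None
    height, width = len(img), len(img[0])
    center_x, center_y = (width - 1) / 2, (height - 1) / 2
    return c[0] - center_x, c[1] - center_y


# A 6x4 frame with a bright 2x2 "ball" in the top-right corner.
frame = [[200 if (r < 2 and c >= 4) else 0 for c in range(6)] for r in range(4)]
print(tracking_error(frame, 150, 255))  # (2.0, -1.0)
```

Returning `None` when nothing passes the threshold lets the caller decide what to do when the ball leaves the frame, for example holding the arm still, rather than chasing a meaningless centroid.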
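The flow-averaging idea can be sketched in a few lines. The sketch starts from an already-computed dense flow field, for example from a method such as OpenCV's `cv::calcOpticalFlowFarneback`; the source does not say which dense method the project used. It averages the field over every pixel, converts the average to a velocity, and integrates the velocities into a trajectory. Two assumptions are stated loudly here because the write-up does not spell them out. First, the camera is taken to translate roughly parallel to the image plane over a static scene, so the scene appears to move opposite to the camera and the mean flow is negated. Second, the result is in pixels and pixels per second, not metric units.

```python
from typing import List, Sequence, Tuple

Vec = Tuple[float, float]
# flow[y][x] = (dx, dy): per-pixel displacement in pixels between two consecutive frames.
FlowField = Sequence[Sequence[Vec]]


def mean_flow(flow: FlowField) -> Vec:
    """Average the dense flow field over every pixel of the image."""
    n, sum_x, sum_y = 0, 0.0, 0.0
    for row in flow:
        for dx, dy in row:
            sum_x += dx
            sum_y += dy
            n += 1
    return sum_x / n, sum_y / n


def camera_velocity(flow: FlowField, dt: float) -> Vec:
    """Image-plane camera velocity in pixels per second.

    Assumption: pure translation parallel to the image plane over a static
    scene, so the scene moves opposite to the camera and the mean is negated.
    """
    mx, my = mean_flow(flow)
    return -mx / dt, -my / dt


def integrate_trajectory(flows: Sequence[FlowField], dt: float) -> List[Vec]:
    """Dead-reckon the camera path by Euler-integrating the velocity estimates."""
    x, y = 0.0, 0.0
    path = [(x, y)]
    for flow in flows:
        vx, vy = camera_velocity(flow, dt)
        x += vx * dt
        y += vy * dt
        path.append((x, y))
    return path


# Two frame pairs in which every pixel shifted 2 px left and 1 px down.
uniform = [[(-2.0, 1.0)] * 2 for _ in range(2)]
print(integrate_trajectory([uniform, uniform], dt=0.5))
# [(0.0, 0.0), (2.0, -1.0), (4.0, -2.0)]
```

Because each position update multiplies the velocity by the same `dt`, the time step cancels in the trajectory itself. It matters only when the velocity estimate is used directly. Since the path is a running sum, any per-frame bias in the mean flow accumulates as drift over time.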

We wrote the computer vision algorithms in C++ using OpenCV and used the ROS framework in Python to implement the robot's PID controller and kinematics. The project demonstrated that the system could track a ball and control a robotic arm based on visual input, and the dense optical flow algorithm provided accurate estimates of the camera's motion.
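A discrete PID controller of the kind described here can be sketched as follows, with one controller per image axis turning the pixel error into a velocity command. This is an illustrative standalone sketch, not the project's ROS node. The gains are hypothetical, and the sketch leaves out three pieces: ROS messaging, the mapping from image axes to the arm's frame, and the Sawyer kinematics.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class PID:
    """Discrete PID controller for one axis (gains are illustrative, not tuned)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt  # accumulate the integral term
        # Skip the derivative on the first sample to avoid a spike.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def velocity_command(pid_x: PID, pid_y: PID, error: Tuple[float, float],
                     dt: float) -> Tuple[float, float]:
    """Map a pixel error (ex, ey) to one velocity command per image axis."""
    ex, ey = error
    return pid_x.update(ex, dt), pid_y.update(ey, dt)


pid = PID(kp=2.0, ki=0.5, kd=0.25)
print(pid.update(1.0, dt=0.5))  # 2.25
print(pid.update(0.5, dt=0.5))  # 1.125
```

Keeping a separate controller per axis is a simple choice, and it works as long as horizontal and vertical image motion map onto roughly independent arm motions. The sign of each command depends on how the camera is mounted, so in practice it would be checked on the real arm.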

MarbleFlow was a rewarding educational experience that deepened our understanding of fundamental computer vision algorithms and their practical applications. It gave us hands-on experience integrating hardware and software to solve a specific problem: tracking and following a moving object with a robotic arm. We are grateful for the opportunity to have worked on this project and look forward to applying what we learned in future computer vision and robotics work. Download our code and try it yourself on our GitHub repository.